Key Metrics for Senior Engineers to Track Productivity

If you cannot track, you cannot improve

This is a paid newsletter, and only 50% of the article is accessible to free subscribers

If you’re not already a paid subscriber, consider upgrading to get maximum benefits and put your career growth on steroids

Back to the main topic,

When I was a mid-level engineer, I used to work hard, write code, fix bugs, and help others.

But even after months, I couldn’t clearly tell what had improved or how my work made the team better.

That’s when I was advised by my mentor to start tracking all things I do.

Not to measure hours or output, but to understand impact.

What I was doing well, what I was ignoring, and where I was wasting effort.

If you cannot track, you cannot improve

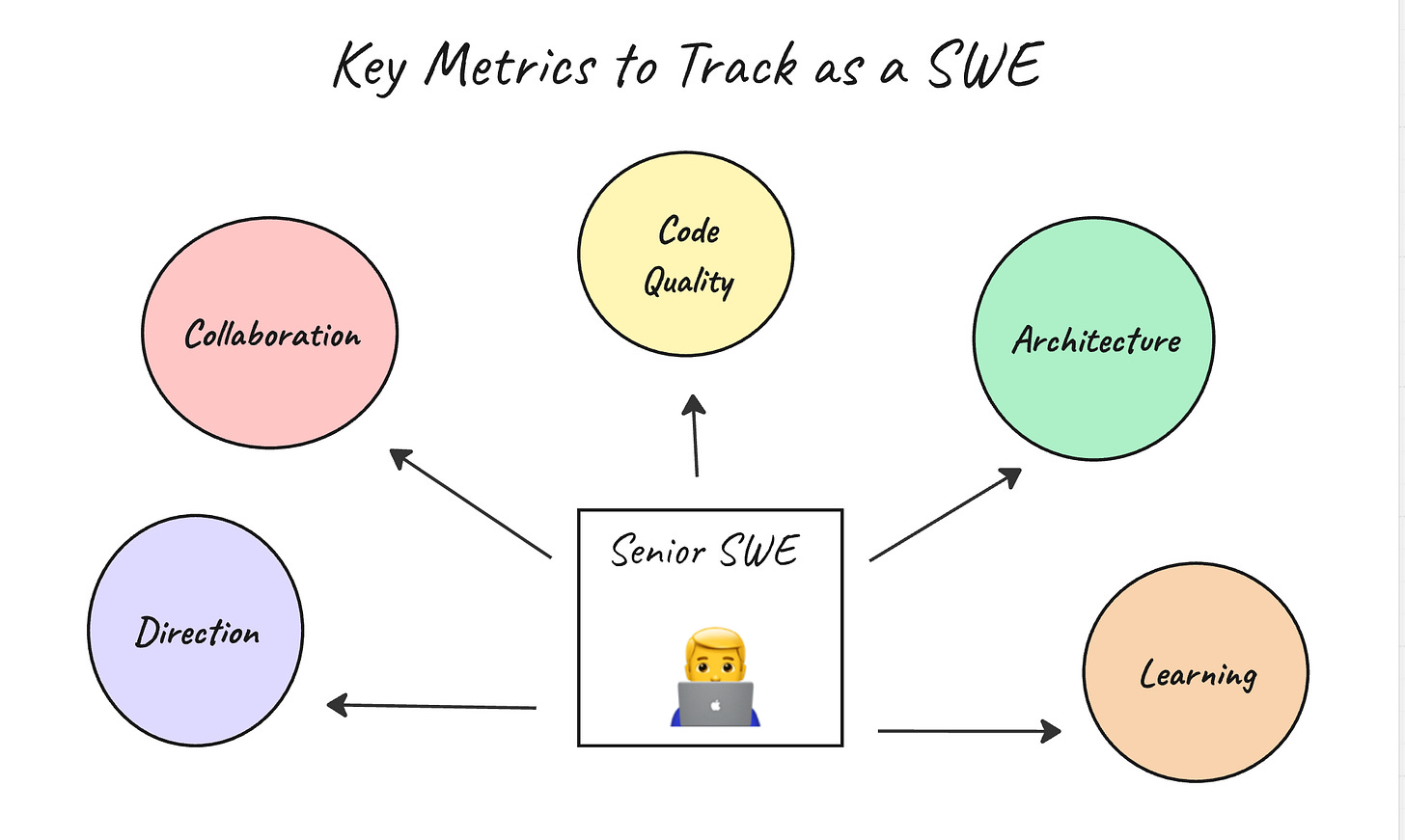

As a senior engineer, your job is not just to write good code but to improve the team, the system, and the process.

In this newsletter, I am sharing how I track my work every six months and how you can do it too.

1. Code Quality and Review Metrics

Code Review Speed

I track how long it takes to review pull requests.

My personal rule is to review everything assigned to me within 24-48 hours.

Delayed reviews block others from shipping and slow down the entire team.

Even a small habit, such as reviewing daily before stand-up or having a focused block to review code, can make a big difference.

How I implement:

Check assigned PRs every morning

If I can’t review fully, leave a note like “Will review tomorrow”

Make it part of my daily routine, not a random task

On days in which there are fewer meetings, I add focused meeting blocks to review

PR Size and Complexity

I note how big my PRs are and how many revisions they take before landing.

Smaller PRs get faster reviews and fewer bugs later.

How I implement:

I try for focused, smaller PRs with a single purpose

Don’t wait too long to merge; break big changes into parts

Keep a note if my PRs often cross 500 lines or multiple files

If your PRs are always large, it may mean you are over-scoping tasks or batching too many changes together.

Bugs After Release

Every time a bug or SEV is linked to my code, I write it down.

I don’t do it for blame, but to find patterns.

If the same type of issue appears frequently, it means something in my process needs to be fixed.

Maybe I skip certain tests or don’t validate enough edge cases.

How I implement:

Maintain a simple doc with “Date, Issue, Cause”

Review it once every two months

Don’t focus on the number of bugs; focus on patterns

2. Architectural and System Metrics

System Uptime and Stability

As a senior engineer, your design choices affect stability.

I keep a note of how often my systems break, how many SEVs were filed, and what caused them.

High stability means your architecture decisions are working.

Frequent failures mean something is weak.

How I implement:

Review incident reports every quarter

Note if the same components keep failing

Fix root causes, not just symptoms

Scalability and Performance

I track how my systems behave as traffic or usage grows.

This includes latency, throughput, and performance under stress.

Scalability is not about over-engineering. It’s about knowing your limits.

How I implement:

Run a small load test before major releases

Track latency or query performance after new features

If numbers degrade over time, plan a cleanup or optimization cycle